FLECKS by Freya Berkhout

A flex sensor tool for embodied interaction with audio.

Idea and concept

I have created a project concerned with touch and intuition: a tool that lets me interact with music I have composed and explore new sonic possibilities within the bounds of those pieces. My conceptual framework initially focused on conductive fabric and sustainable fashion; however, through experimentation I turned to flex sensors, which were responsive, reliable and, most importantly, tactile and fun. As a result, my concept evolved to be about bringing embodied interaction into my composing process, and about how flex sensors could make my compositions tangibly malleable. The pieces I have written are almost exclusively created using my voice, which has been an important part of my life and artistic practice since I was a child. I am drawn to the human voice because it is so inherently human, and I find it a special and uniquely affective way of communicating that crosses cultural, linguistic and geographical boundaries. Flecks allows me to physically interact with my compositions in ways that traditional musical instruments don't. It was also important to me that the physical act of pressing the flex sensors feels apt for the musical effects it controls. After experimenting with reversing the tracks, granular synthesis, and chopping and playing samples, I found that time shifting and filtering felt the most natural and simply felt right.

Process

My idea changed a lot as I experimented for this project. I was originally looking at making a squishy box that could be interacted with to manipulate music, but as I explored the conductive fabric I had hoped to use, it became clear that it was too volatile and unreliable for this project. As a result, I pivoted to flex sensors, which offered a different way to achieve a similar experience: squeezing and pressing to cause sonic change. This project was all about experimenting with different ways of physically exploring my music, and my evolution from conductive fabric to flex sensors has been a very valuable learning curve.

Technical

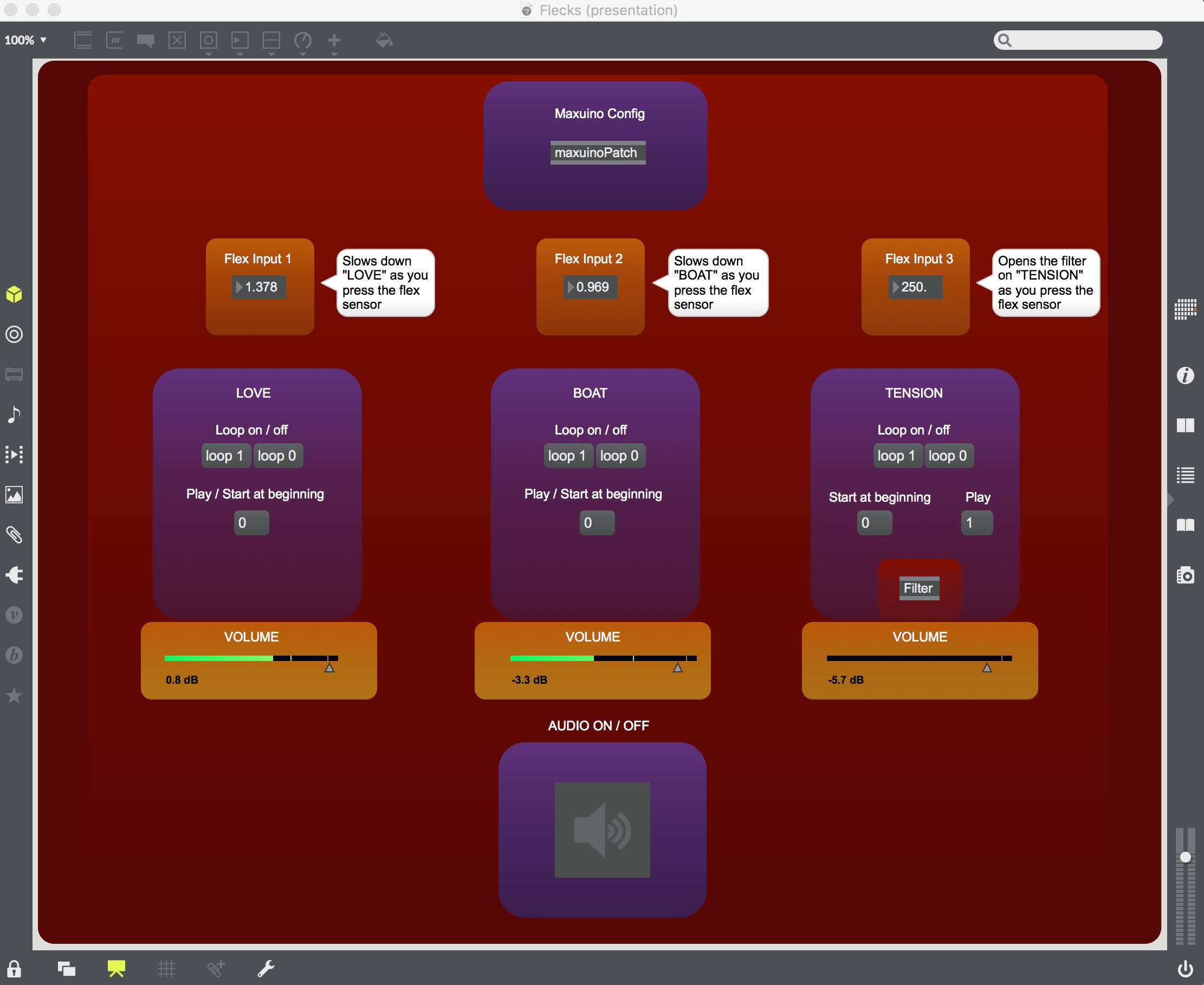

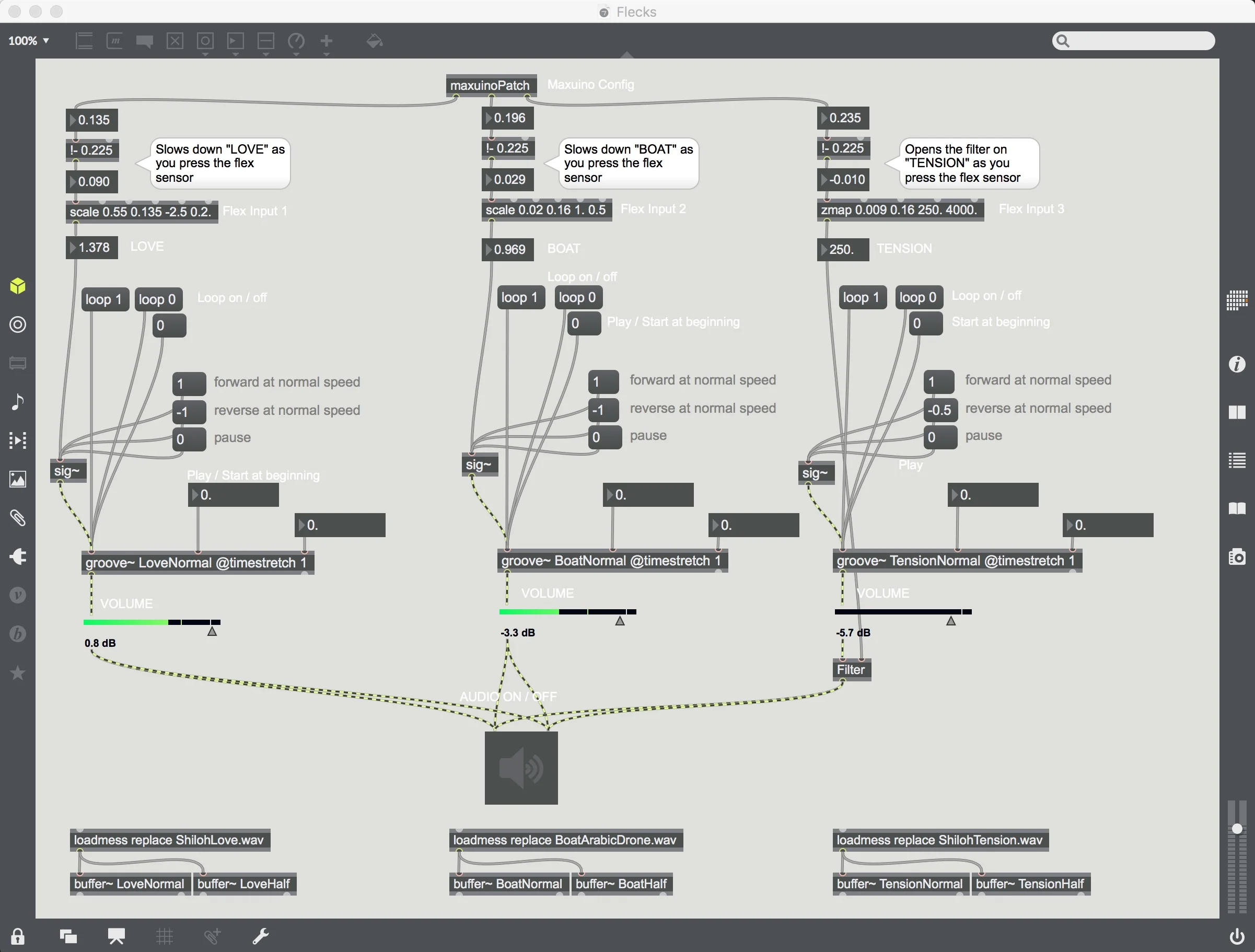

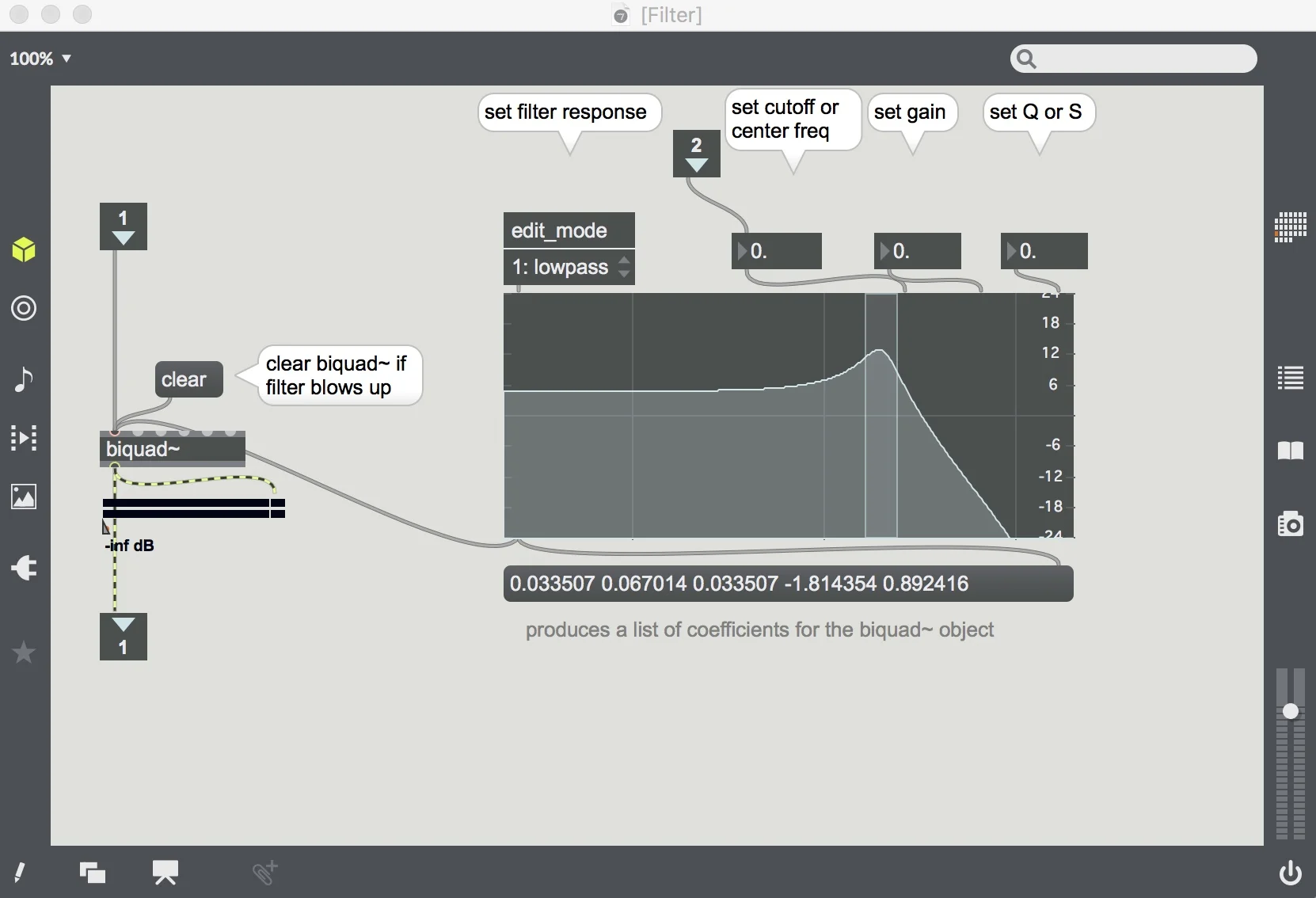

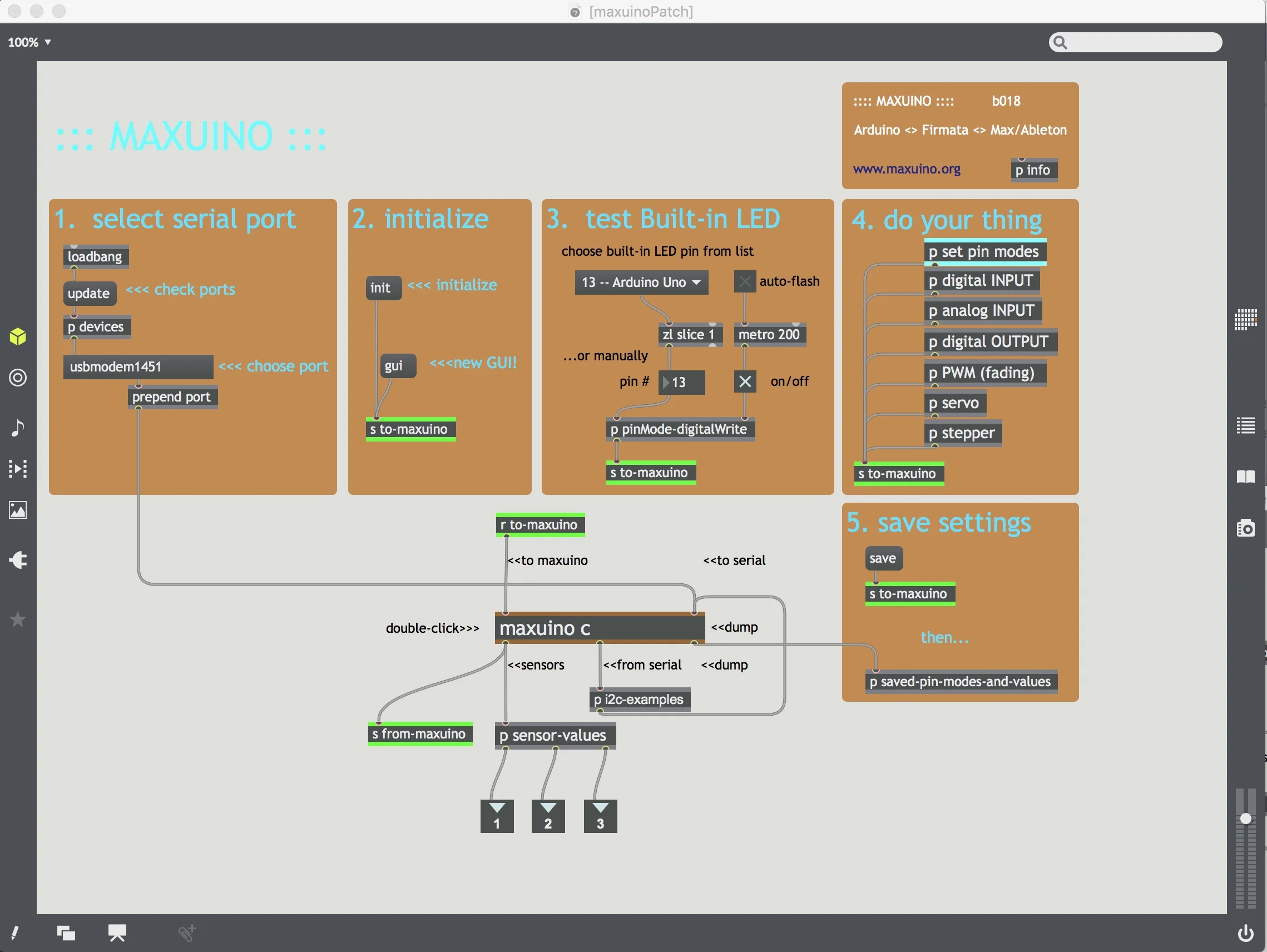

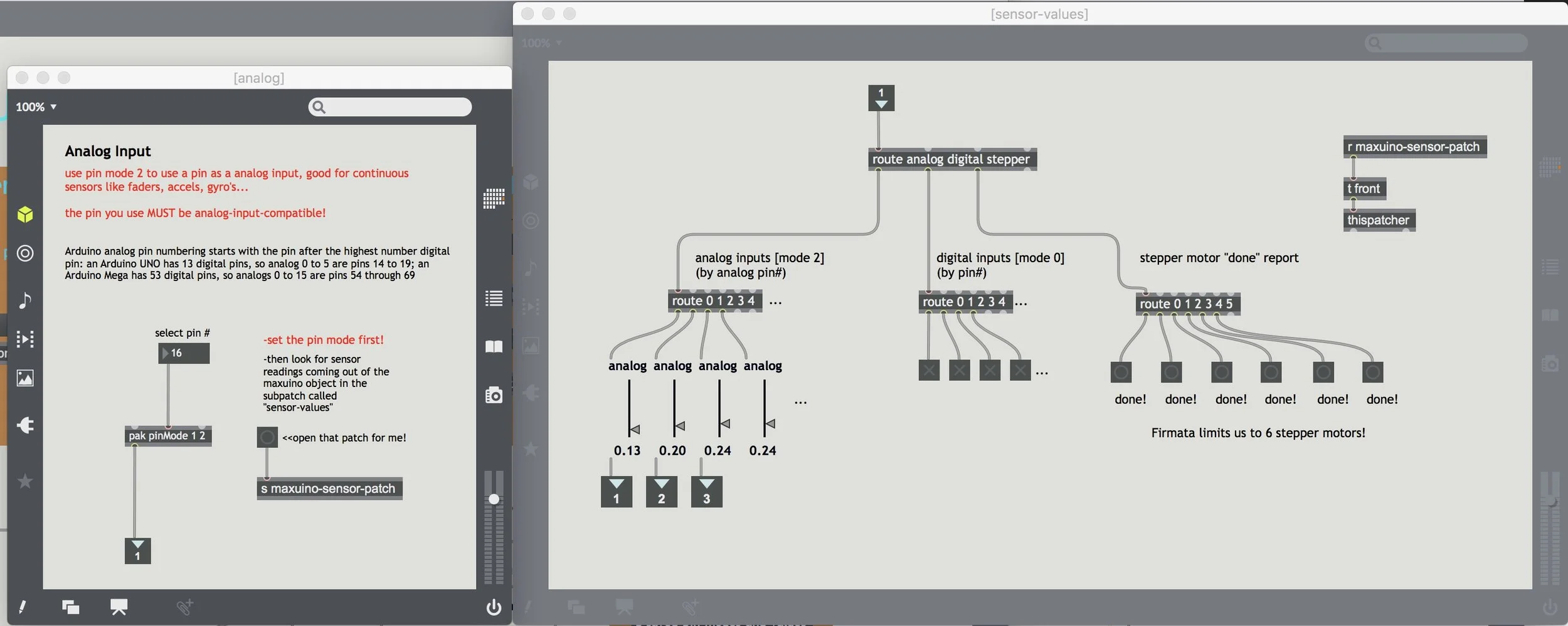

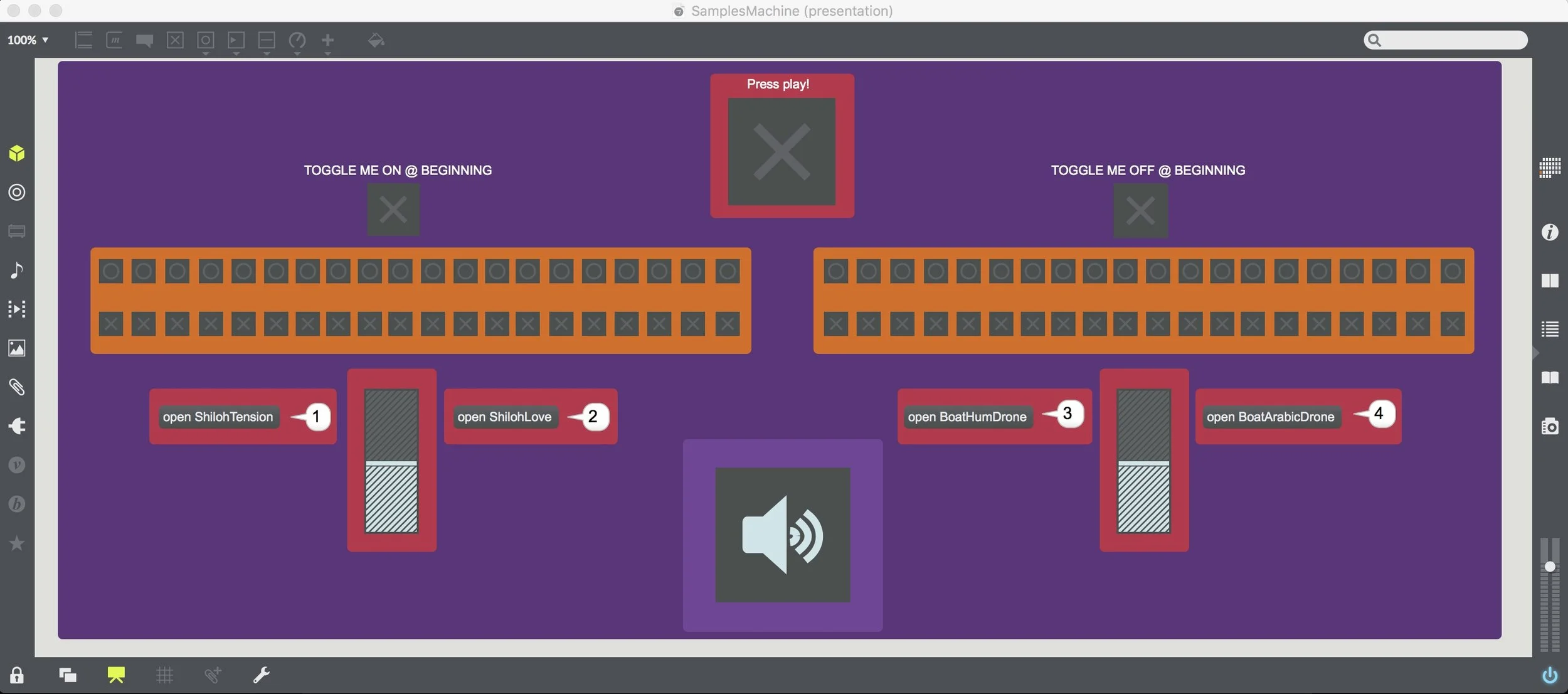

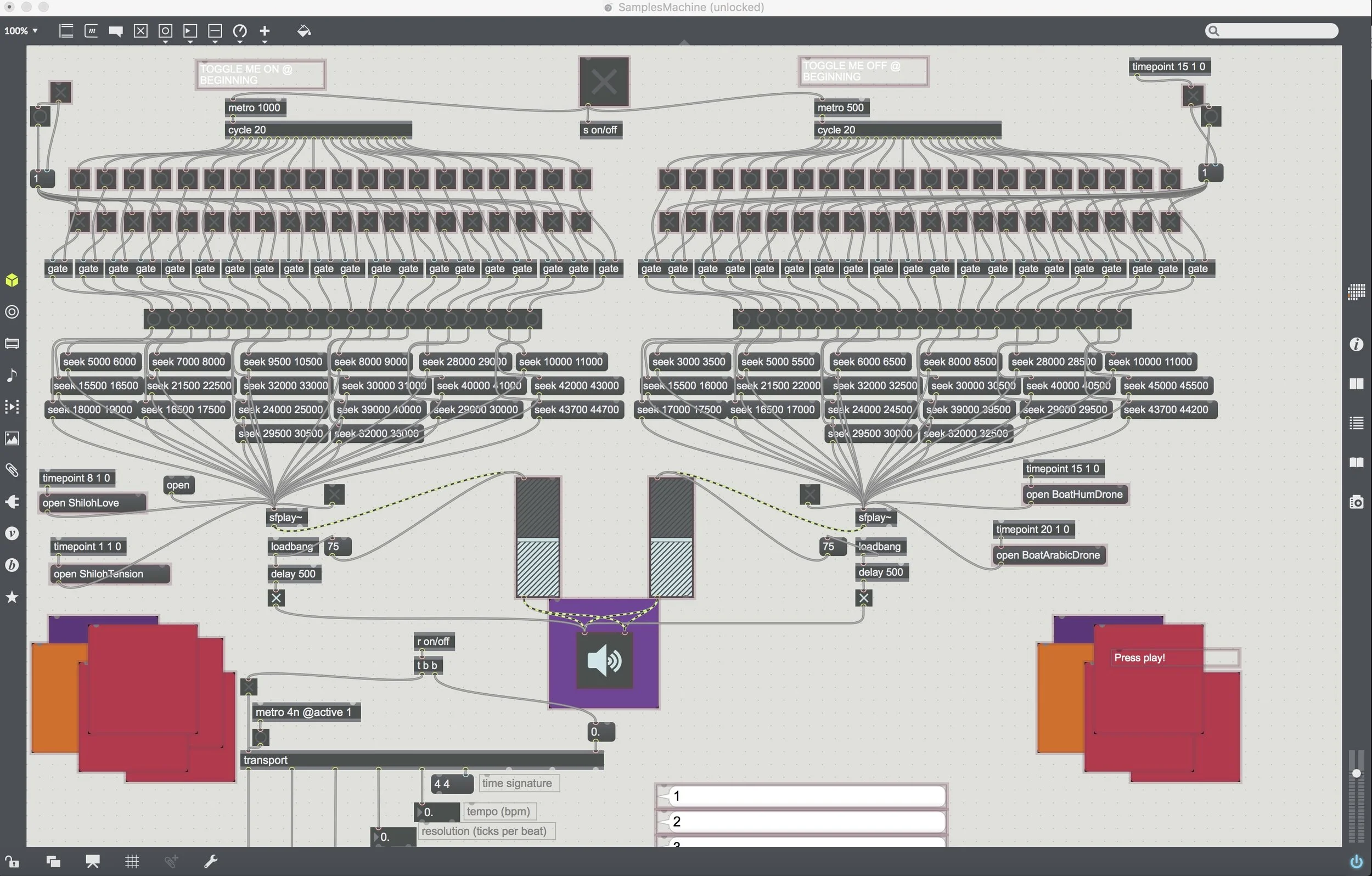

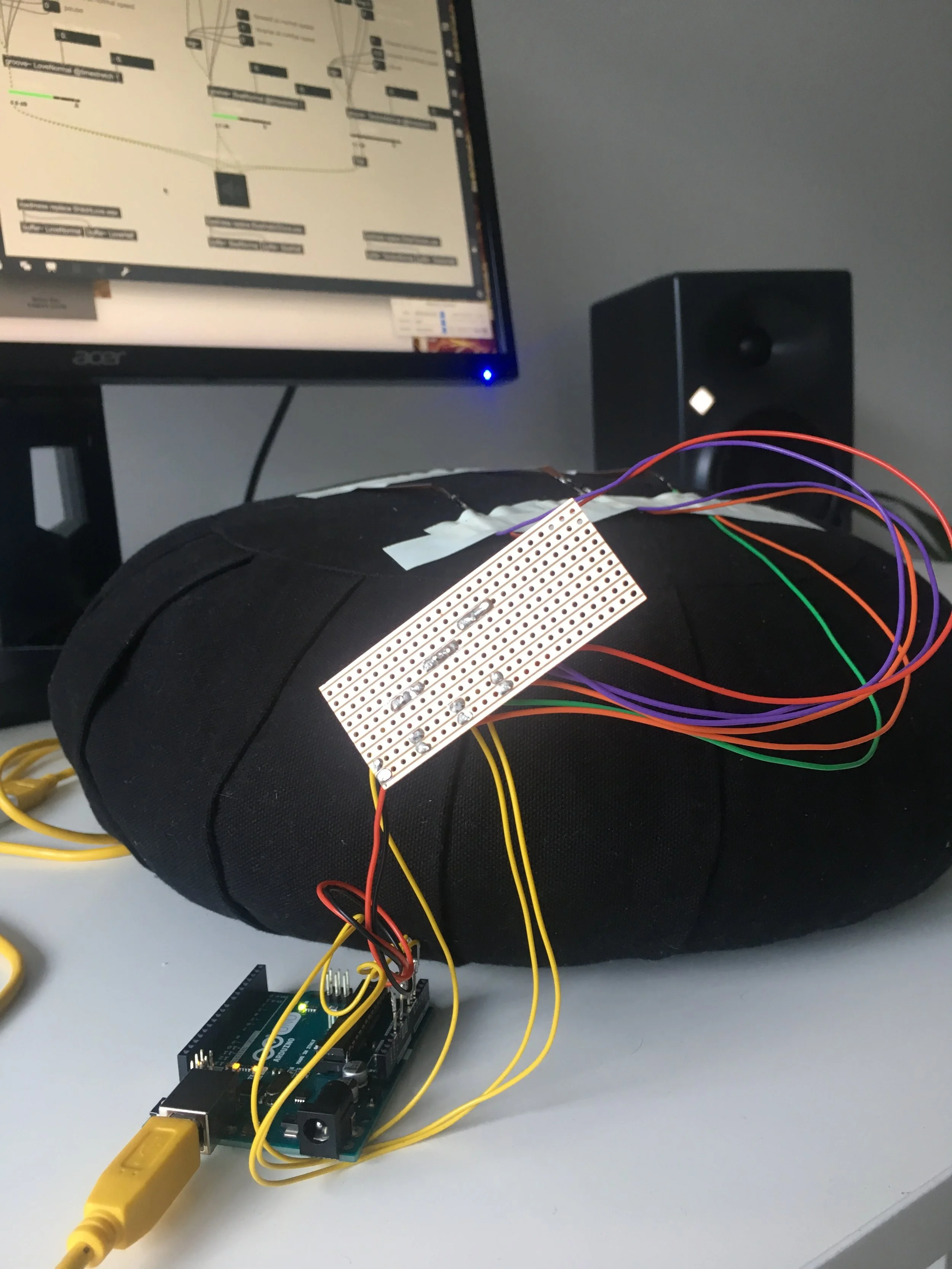

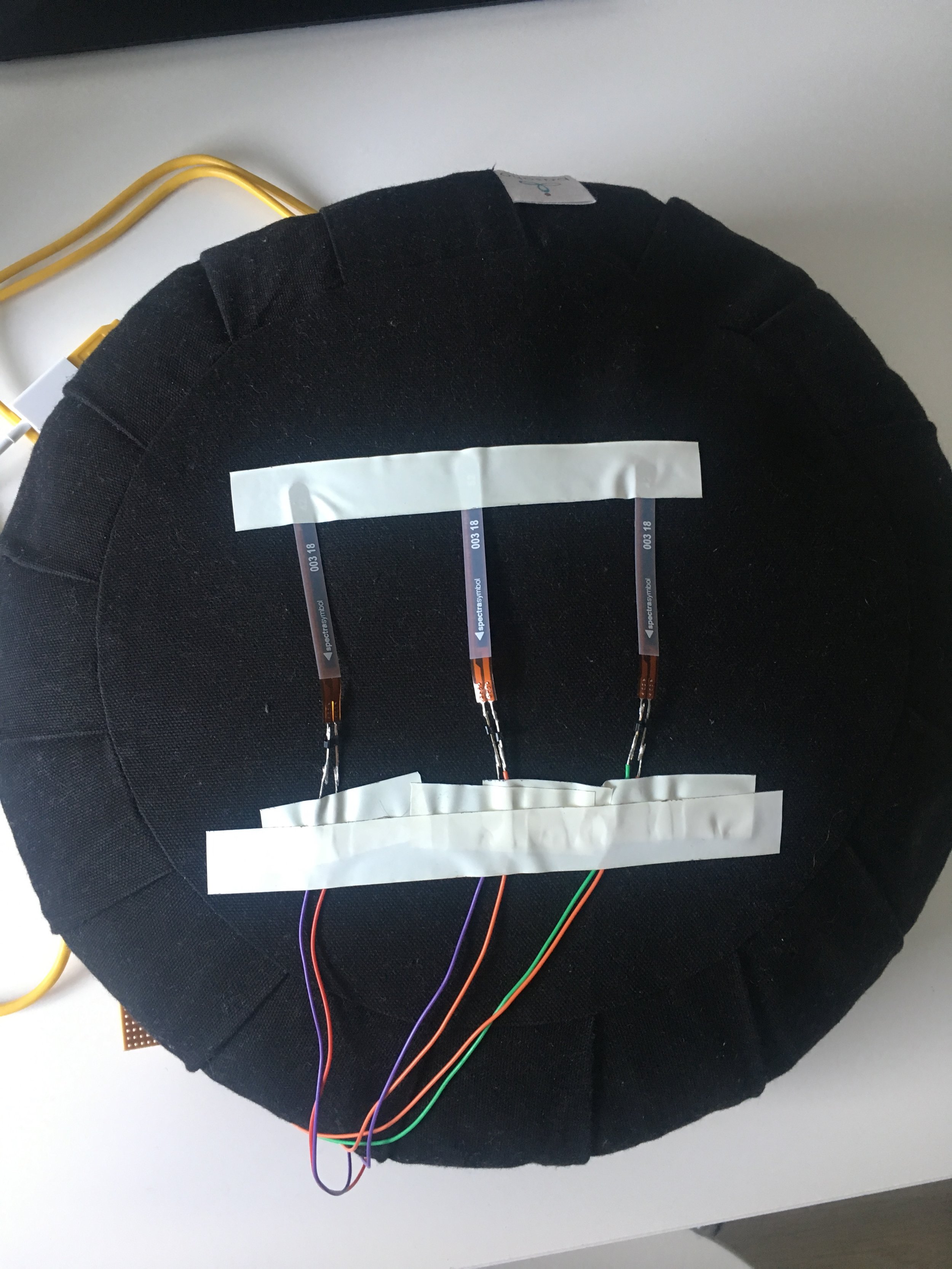

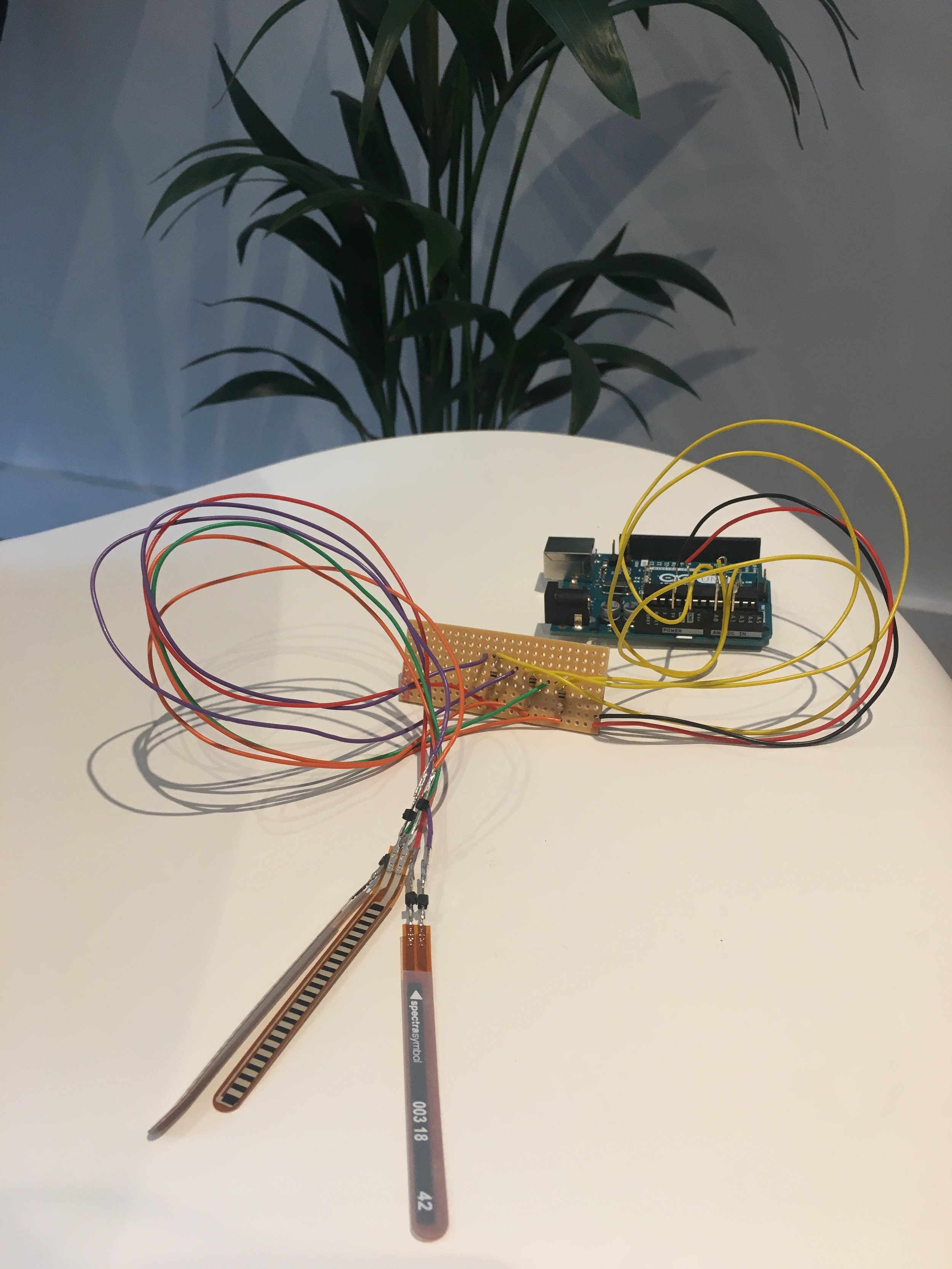

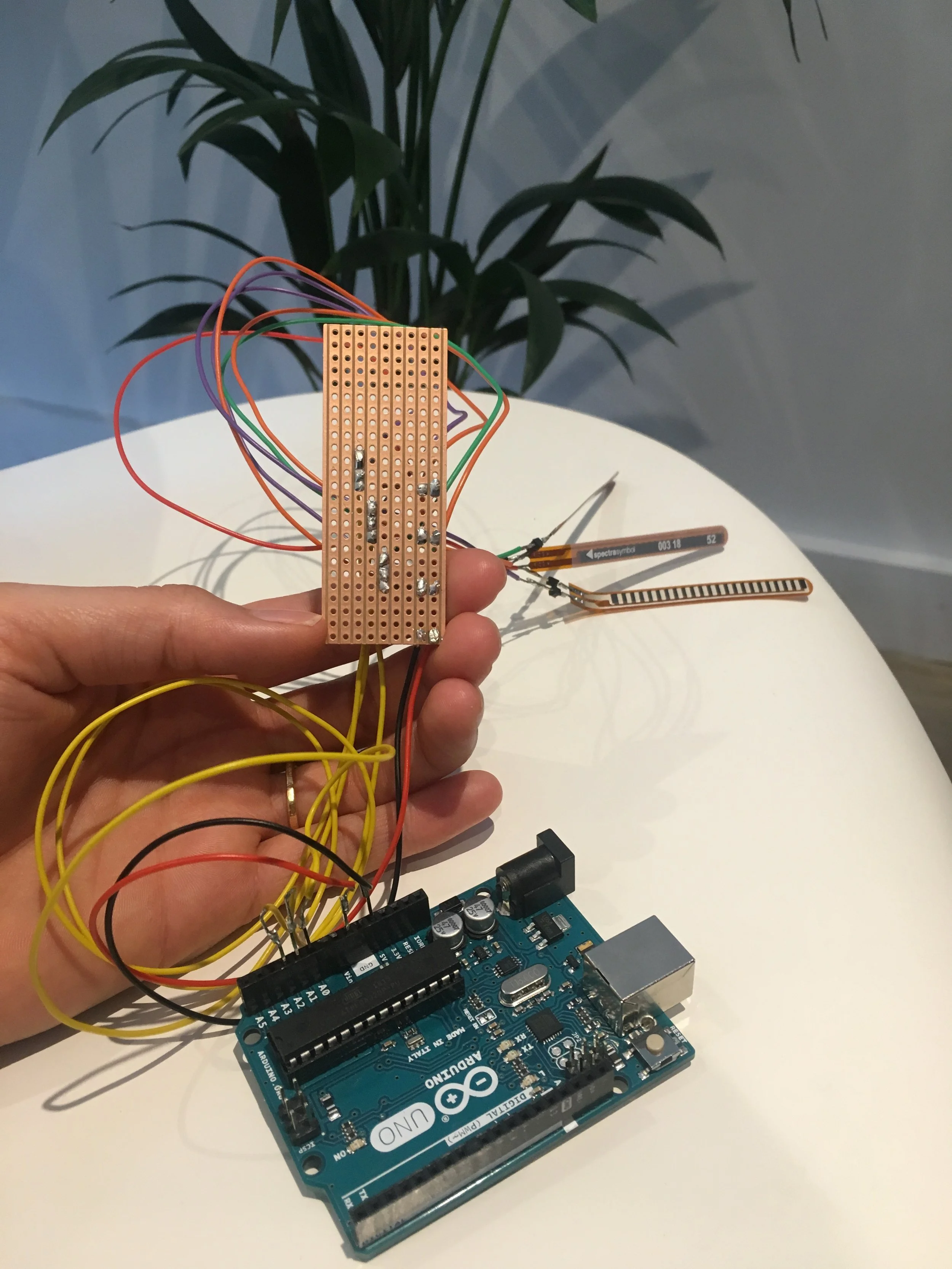

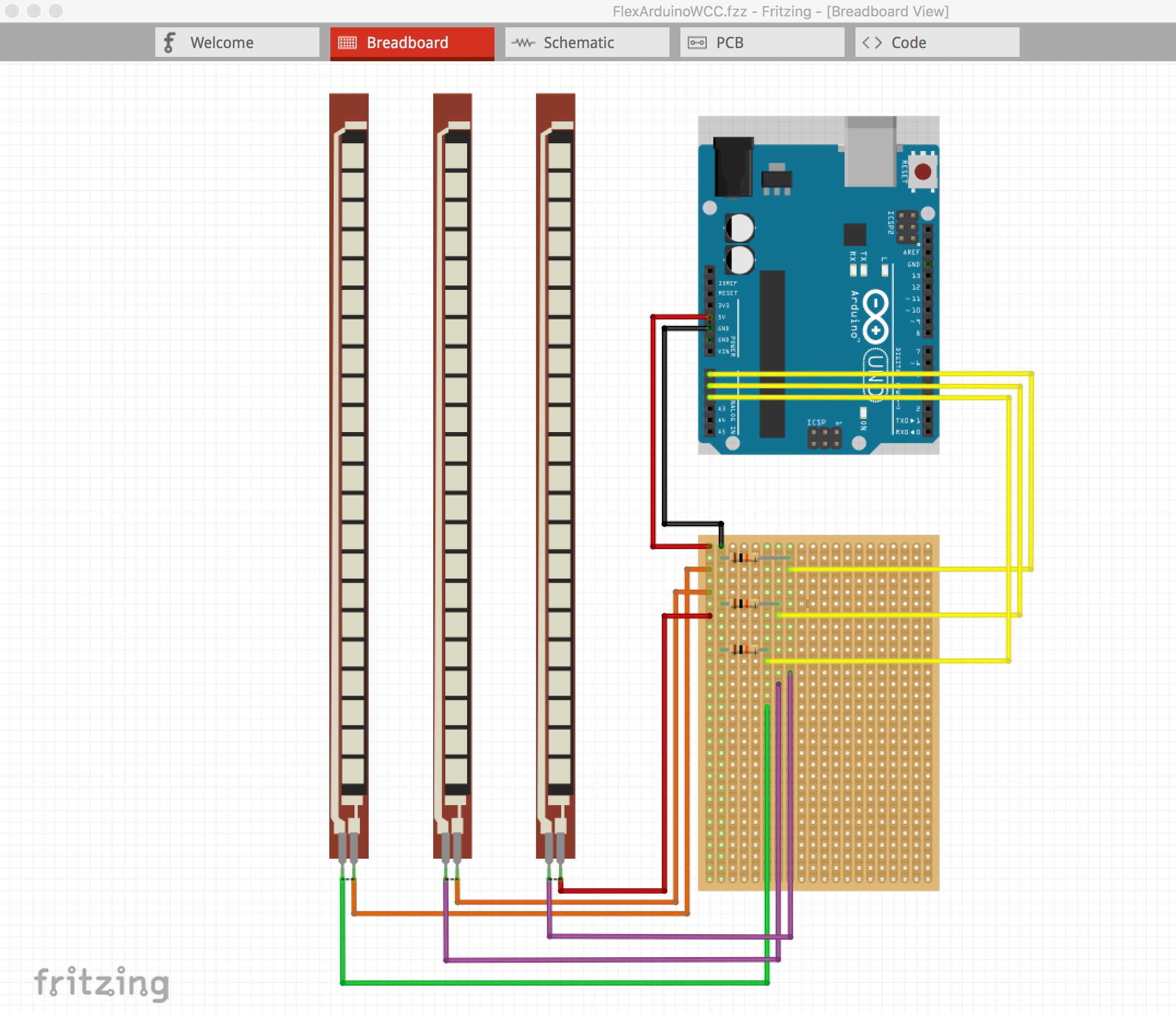

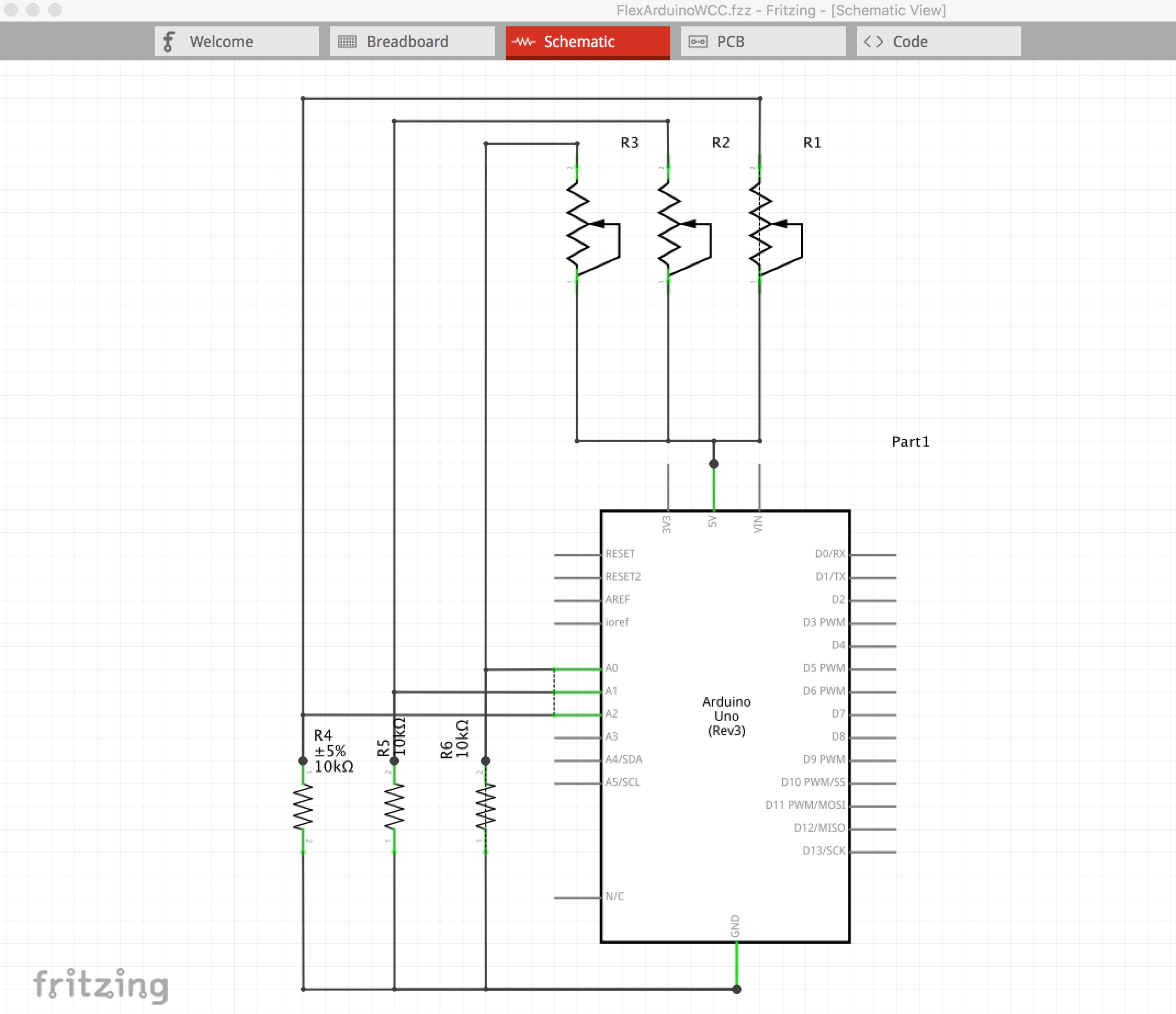

Flecks is an embodied audio manipulation tool built with three flex sensors, an Arduino, Maxuino and Max/MSP. The most complex part of the piece was getting continuous resistance readings from the flex sensors into Maxuino and then converting them into usable numbers in Max.
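Converting raw sensor readings into usable numbers is essentially a linear rescaling. The Python sketch below is illustrative only and is not the patch used in Flecks: it assumes raw 10-bit readings (0 to 1023, as returned by an Arduino Uno's analogRead) and does in floating point what Max's [scale] object or Arduino's map() would do, with clamping added, since Arduino's map() uses integer arithmetic and does not constrain out-of-range values. The function name and example ranges are hypothetical.

```python
def scale(value: float, in_min: float, in_max: float,
          out_min: float, out_max: float, clamp: bool = True) -> float:
    """Linearly map value from [in_min, in_max] onto [out_min, out_max].

    Hypothetical helper, similar in spirit to Max's [scale] and Arduino's
    map(), but in floating point and optionally clamped to the output range.
    """
    if in_max == in_min:
        raise ValueError("input range must not be empty")
    # Position of the value within the input range (0.0 at in_min, 1.0 at in_max).
    t = (value - in_min) / (in_max - in_min)
    if clamp:
        # Keep stray readings outside the expected range from overshooting.
        t = min(max(t, 0.0), 1.0)
    return out_min + t * (out_max - out_min)


# Hypothetical example: a raw reading mapped onto a playback-rate range.
rate = scale(512, 0, 1023, 0.5, 2.0)
```

Swapping out_min and out_max inverts the mapping, which is one way to handle sensors whose readings fall as they are pressed.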

Reflection

I am really happy with how this project turned out. It has been an interesting, experimental journey, and I find the final product to be a curious, intuitive and tactile tool for manipulating audio. It could be used with less polished pieces of music to create textural elements within a larger work, or in performance or dance to accentuate the way movement changes the sound. I have found it a lovely way to interact tangibly with my music, and I look forward to exploring the limits of this idea further.

Areas for further development

It would be really interesting to add more flex sensors and expand the number of pieces and effects this tool lets you explore. I can also imagine it becoming a way for a group of people to collaborate. It would be fun to add a visual element that mirrors and indicates the sonic changes over time.

13th May Update

For the past two weeks I have been refining my Max patch and exploring different ways to shape the pieces I've created. The three songs I am using are all different but exist in the same universe, mostly made from different kinds of vocals: pitched and slowed, layered and textured. I am really happy with how they sound together, and the flex sensors let me create almost a new piece each time by manipulating different elements. I decided to focus on time shifting and filtering with the flex sensors. I have thought a lot about implementing an API; however, now that the concept of this project has changed, adding one feels a little tacked on and not in line with my objective, which is to create an interactive tool for embodied composition.

1st May Update

I have had the Maxuino patch working for a little while and have been experimenting with the ranges the flex sensors produce, so that they create meaningful, audible change in the sound files I'm manipulating. Now that I am no longer using the conductive fabric, I have had to reconsider whether I would still like to use the Twitter API (or another API), especially given the theoretical framework around sustainable fashion that I was interested in exploring earlier. At this point I feel I would be adding it for the sake of it rather than for a real purpose, so I will wait before deciding. Since switching to flex sensors, the objective of my project has shifted slightly: I am now interested in creating a tangible, tactile tool for sound manipulation.
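Finding ranges that produce audible change usually starts with knowing what each sensor actually reports. A minimal calibration sketch, assuming integer readings arrive one at a time: it records the lowest and highest values seen so far and normalises new readings to 0.0 to 1.0 against that observed range. The class name is hypothetical and this is not taken from the Flecks patch.

```python
from typing import Optional


class RangeCalibrator:
    """Hypothetical helper: records the lowest and highest readings seen so
    far and normalises new readings to 0.0-1.0 against that observed range."""

    def __init__(self) -> None:
        self.lo: Optional[int] = None
        self.hi: Optional[int] = None

    def update(self, reading: int) -> None:
        """Widen the observed range to include this reading."""
        self.lo = reading if self.lo is None else min(self.lo, reading)
        self.hi = reading if self.hi is None else max(self.hi, reading)

    def normalise(self, reading: int) -> float:
        """Position of the reading within the observed range, clamped."""
        if self.lo is None or self.hi is None or self.hi == self.lo:
            return 0.0  # not enough data yet to define a range
        t = (reading - self.lo) / (self.hi - self.lo)
        return min(max(t, 0.0), 1.0)
```

In practice each sensor could be pressed through its full travel during a short calibration phase, after which the range is left fixed so that the mapping stays stable during performance.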

16th April Update

The flex sensors are working really well. I soldered the connections onto copper board, and they are giving resistance values quite reliably. The next step will be to get the Maxuino example patch working so that the values from the Arduino reach Max/MSP, and then to work out the best way to scale and map them. The flex sensors are odd in that their values drop rather than rise as you press them, so I will need to map them to a range that works for the time shifting and filter effects I'd like to explore in the Max patch. Also, a name change is in order given how the project has evolved, so I will be calling it "Flecks".
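Because the readings fall as the sensor is pressed, one approach is to first convert each reading into a "press amount" that rises from 0.0 to 1.0, then map that onto effect parameters. The sketch below assumes hypothetical calibration values (700 at rest, 400 fully pressed) and hypothetical parameter ranges; it is not the mapping used in Flecks. It uses an exponential curve for filter cutoff because frequency is perceived roughly logarithmically, so equal movements of the sensor give roughly equal musical steps.

```python
import math

# Hypothetical calibration values for one sensor (raw 10-bit readings).
REST_READING = 700      # sensor untouched
PRESSED_READING = 400   # sensor fully pressed


def press_amount(reading: float, rest: float = REST_READING,
                 pressed: float = PRESSED_READING) -> float:
    """0.0 when untouched, 1.0 when fully pressed, even though the raw
    reading falls as the sensor is pressed."""
    t = (rest - reading) / (rest - pressed)
    return min(max(t, 0.0), 1.0)


def cutoff_hz(amount: float, f_min: float = 200.0, f_max: float = 8000.0) -> float:
    """Exponential mapping: pressing further opens the filter in roughly
    equal musical steps rather than equal steps in Hz (hypothetical range)."""
    return f_min * (f_max / f_min) ** amount


def stretch_rate(amount: float, slowest: float = 0.25) -> float:
    """Playback-rate factor: 1.0 at rest, slowing linearly to `slowest`."""
    return 1.0 - amount * (1.0 - slowest)
```

Whether pressing should open or close the filter, or speed up or slow down playback, is a musical choice; the direction can be flipped by swapping f_min and f_max or by changing `slowest`.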

3rd April Update

The conductive fabric has become very difficult to wrangle, even after soldering my Arduino connections onto copper board. Because I can't solder onto the fabric itself, the resistance values change constantly whenever the fabric or its connections move, even slightly. As a result, I recently decided to pivot and explore one-directional flex sensors instead. I hope these will have a similar feel when pressed and can still affect the sonic elements of the piece. I have also continued trying different compositions in my sampler patch to see which might be worth using and building upon for the final piece.
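The project dealt with this by switching sensors. For comparison, a common software remedy for small, rapid fluctuations in analog readings is an exponential moving average, sketched below in Python. It only smooths jitter and cannot fix a connection that is physically unstable, which was the underlying problem here. The class name and the smoothing factor are illustrative assumptions.

```python
from typing import Optional


class EMASmoother:
    """Hypothetical exponential moving average for noisy sensor readings.

    alpha close to 1.0 follows the input quickly; alpha close to 0.0
    smooths heavily but responds more slowly to real movement.
    """

    def __init__(self, alpha: float) -> None:
        if not 0.0 < alpha <= 1.0:
            raise ValueError("alpha must be in (0, 1]")
        self.alpha = alpha
        self.value: Optional[float] = None

    def update(self, reading: float) -> float:
        if self.value is None:
            self.value = float(reading)  # seed with the first reading
        else:
            # Move a fraction alpha of the way towards the new reading.
            self.value += self.alpha * (reading - self.value)
        return self.value


smoother = EMASmoother(alpha=0.2)
smoothed = [smoother.update(r) for r in [512, 530, 498, 505]]
```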

24th March Update

The experiments with the conductive fabric have continued. I hooked both types up to the Arduino using alligator clips and a resistor to get a continuous resistance value in the serial monitor, and it is working. I tried the same with the Flora, but the readings were much more volatile, so I decided to work with the Arduino. I have also been working on a sampler patch that manipulates some pieces of music I wrote, mostly made from natural, pitched and affected vocals. I will be using this type of conceptual framework for the final patch.
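A fixed resistor and a variable-resistance material wired in series form a voltage divider, and the Arduino reads the voltage at the point between them. The sketch below converts a reading back into resistance. It assumes a 10-bit ADC (0 to 1023), the sensor connected between the supply and the analog pin, and the fixed resistor between the analog pin and ground; the 10 kΩ value is a hypothetical example, since the original resistor value isn't recorded. With this wiring the reading falls as the sensor's resistance rises, and swapping the two components reverses that direction.

```python
ADC_MAX = 1023  # full-scale reading of a 10-bit ADC (assumption)


def expected_reading(r_sensor: float, r_fixed: float = 10_000.0) -> float:
    """Reading the divider should produce for a given sensor resistance:
    reading = ADC_MAX * r_fixed / (r_sensor + r_fixed)."""
    return ADC_MAX * r_fixed / (r_sensor + r_fixed)


def sensor_resistance(reading: float, r_fixed: float = 10_000.0) -> float:
    """Invert the divider to estimate sensor resistance (ohms) from a reading."""
    if reading <= 0:
        return float("inf")  # no current flowing: open circuit or disconnected
    return r_fixed * (ADC_MAX - reading) / reading
```

Logging `sensor_resistance` alongside the raw reading can make it easier to compare materials, since the resistance figure does not depend on the choice of fixed resistor.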

15th March Update

I have been experimenting with conductive fabric for the past few days. I bought two types: conductive stretch fabric that stretches in one direction, and conductive jersey that stretches in every direction. Both work as a switch to turn on an LED with an Arduino and with a Flora.


MAX/MSP Project Proposal — Voice Box

19th February, 2018

Introduction:

My project will be an interactive site-specific installation, tentatively called Voice Box, which will play an atmospheric score composed entirely using the human voice. It will involve creating many vocal samples to form a vocal bed that is controlled by the Twitter API. To bring the project into the physical space, I will create a cube-like frame covered in conductive fabric, which communicates with an Arduino (or other microcontroller), bringing in different aural elements when the audience interacts with it.

Aims and Objectives:

I hope this project will be intriguing, engaging and beautiful. I intend to use the Twitter API to focus on hashtags relating to fair trade fashion, an issue I am really passionate about. Through the Twitter API, I hope people will engage with the Voice Box and then discover further layers of meaning, sparking thought and reflection about the inequality and destruction the garment industry causes to people (particularly women in the Global South), to animals and to the planet. Because I am using conductive fabric, I felt there was a link to the fashion industry and an opportunity to pose questions. I am fascinated by the concept of digital witnessing, and through my computational arts research I have discovered many ethical quandaries surrounding it, particularly the geopolitics of privilege, the Western gaze, and how the obfuscation of information is perpetuated by the companies, politicians and organisations that benefit from it. There is power in making visible the things that corporations deliberately keep out of sight, and there is power in choosing to look at and act on things we would rather not see. I hope this work will give the audience a gentle opportunity to contemplate the impact of their choices.

Technically, I hope to create stochastic and responsive elements so that no two people's interactions with the cube are the same. The work will evolve and surprise over time, particularly the elements controlled by the Twitter API, which will by nature be unexpected. This project will also be a fun musical challenge for me! I am extremely drawn to the human voice, and as a vocalist I find it an innately emotive and human sound. I hope audiences will feel emotionally connected to the work through the music and through their ability to control and interact with it.
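One simple way to make playback stochastic without sounding repetitive is a "shuffle bag": each sample is used once, in random order, before the set is reshuffled, much like Max's [urn] object. A minimal Python sketch, assuming each side of the cube has a list of uniquely named samples (the file names below are hypothetical); it also avoids playing the same sample twice in a row when the bag is refilled.

```python
import random
from typing import List, Optional, Sequence


class ShuffleBag:
    """Hands out items in random order, using each one once before
    reshuffling, and never repeats an item back to back.
    Assumes the item names are unique."""

    def __init__(self, items: Sequence[str], rng: Optional[random.Random] = None) -> None:
        if not items:
            raise ValueError("need at least one item")
        self.items = list(items)
        self.rng = rng if rng is not None else random.Random()
        self.bag: List[str] = []
        self.last: Optional[str] = None

    def draw(self) -> str:
        if not self.bag:
            self.bag = self.items[:]
            self.rng.shuffle(self.bag)
            # The next item drawn is bag[-1]; if it matches the previous
            # draw, swap it with bag[0] so the same sample doesn't play twice.
            if len(self.bag) > 1 and self.bag[-1] == self.last:
                self.bag[0], self.bag[-1] = self.bag[-1], self.bag[0]
        self.last = self.bag.pop()
        return self.last


# Hypothetical samples for one side of the cube.
low_side = ShuffleBag(["low_hum_1.wav", "low_hum_2.wav", "low_drone.wav"])
next_sample = low_side.draw()
```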

Method:

The project has three main technical actors: the Twitter API, Max/MSP, and the Arduino taking real-time data from the conductive fabric. All the vocals will be recorded and mixed in Pro Tools, then added to Max as sound files and allocated either to the Twitter API stream or to a stream for one of the five functioning sides of the cube. The sound bed that the API conducts will be atmospheric, with layers of textural and melodic voices. The real-time data from the conductive material on each side of the cube will control samples with different timbral qualities: one bassier, using pitched-down vocals, another lighter and more rhythmic, and so on. It will be challenging to create modular samples that all slot together like a puzzle, and I look forward to experimenting with this.

Project Plan:

Using conductive fabric is completely new to me and will involve extensive exploration, especially regarding how to connect the pieces together, how best to use the data arriving through the serial port, and what that means in practice for triggering samples in Max. I think this is the area I will begin researching and playing with first, as my entire project hinges on the conductive fabric working smoothly.
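How the serial data can be used depends on how the Arduino sketch formats it. Assuming, hypothetically, that the Arduino prints one line per reading cycle with five comma-separated values (one per cube side, each 0 to 1023), the Python sketch below validates and parses a single line. Lines could come from a serial library such as pyserial's readline(); here the parser takes raw bytes so it can be tested on its own. The function name and line format are assumptions, not part of the original plan.

```python
from typing import List, Optional


def parse_line(raw: bytes, expected: int = 5) -> Optional[List[int]]:
    """Parse one line such as b'512,498,1003,12,0\\r\\n' into integers.

    Returns None for partial or garbled lines, which are common right
    after a serial port is opened.
    """
    try:
        text = raw.decode("ascii").strip()
        values = [int(part) for part in text.split(",")]
    except (UnicodeDecodeError, ValueError):
        return None  # non-ASCII bytes, empty fields or non-numeric text
    if len(values) != expected:
        return None  # wrong number of sides: probably a truncated line
    if any(v < 0 or v > 1023 for v in values):
        return None  # outside the 10-bit range, so treat as corrupt
    return values
```

Discarding bad lines rather than raising keeps a live installation running through occasional serial glitches.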

The element I intend to work on consistently throughout production is composing a large volume of music for this project. The music will all have to be written as independent samples that can be layered in any conceivable way. Extensive testing and troubleshooting will be the only way to make sure the music is ear-catching, moving and cohesive.
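The requirement that samples layer "in any conceivable way" can be quantified. If each of the five sides is either silent or playing one of k samples, there are (k + 1)^5 combinations, minus one for total silence: with one sample per side that is 2^5 − 1 = 31 layerings, and with three per side it is 4^5 − 1 = 1023. The sketch below counts and enumerates them, treating k as a hypothetical parameter and leaving the Twitter-driven sound bed out of the count.

```python
from itertools import product
from typing import Iterator, Optional, Tuple


def count_layerings(sides: int, samples_per_side: int) -> int:
    """Non-empty combinations when each side is silent or plays one sample."""
    return (samples_per_side + 1) ** sides - 1


def enumerate_layerings(sides: int, samples_per_side: int) -> Iterator[Tuple[Optional[int], ...]]:
    """Yield each combination: None means that side is silent,
    an integer is the index of the sample it plays."""
    choices = [None] + list(range(samples_per_side))
    for combo in product(choices, repeat=sides):
        if any(c is not None for c in combo):  # skip total silence
            yield combo
```

Enumerating the combinations gives a checklist for listening tests, which makes it clear how quickly the testing load grows as samples are added.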

Finally, the project will require quite a bit of research into using the Twitter API and parsing the collected data in Max. I will need to research which Twitter hashtags and accounts will provide the most fruitful creative data, which I expect will require heavy experimentation. Initially, my focus will be on hashtags such as #whomadeyourclothes, #whomademyclothes, #sustainablefashion and #ethicalfashion, though this may change as I become more familiar with and adept at using the Twitter API.
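Before connecting to the live API, the hashtag logic can be tried offline. The sketch below assumes tweet text has already been fetched (the fetching step is omitted, since it depends on API access and credentials) and counts case-insensitive occurrences of the tracked hashtags; the counts could then be scaled into parameters for the sound bed. The sample texts and function name are hypothetical.

```python
import re
from collections import Counter
from typing import FrozenSet, Iterable

# Hashtags named in the proposal, stored lower-case for matching.
TRACKED: FrozenSet[str] = frozenset({
    "#whomadeyourclothes",
    "#whomademyclothes",
    "#sustainablefashion",
    "#ethicalfashion",
})

# A hashtag is '#' followed by letters, digits or underscores.
HASHTAG = re.compile(r"#\w+")


def count_tracked(texts: Iterable[str], tracked: FrozenSet[str] = TRACKED) -> Counter:
    """Count how often each tracked hashtag appears across the given texts."""
    counts: Counter = Counter()
    for text in texts:
        for tag in HASHTAG.findall(text.lower()):
            if tag in tracked:
                counts[tag] += 1
    return counts


# Hypothetical sample texts standing in for fetched tweets.
sample = ["Love this! #EthicalFashion #ootd", "#WhoMadeMyClothes? #ethicalfashion"]
counts = count_tracked(sample)
```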

Production Schedule:

Weeks of:

19th Feb — Research how to use Twitter APIs and how they would be implemented in conjunction with Max. Research conductive fabrics.

26th Feb — Begin composing and recording vocal samples and start working on a basic framework for the Max patch.

5th Mar — Continue writing and recording vocal parts, flesh out the Max patch and start to layer vocals through five different channels (to mock up what the cube sides would sound like).

12th Mar — Purchase conductive fabric and research how to connect multiple pieces and experiment with the data that arrives through the Arduino serial port. Think about how this could be mapped to the vocal samples effectively.

19th Mar — Devise a way to create a frame that will adequately stretch the conductive fabric but allow enough room for people to press down on it to create the real time data for the Arduino. Continue work on the Max patch and the vocal layers, continue researching Twitter API and how to integrate it.

26th Mar — Make a simple prototype of the cube to begin experimenting with the musical aspects of the work as well as writing and tweaking parameters.

2nd April — Start to implement Twitter API live stream data and experiment with different hashtags and other parameters to create the desired musical effect. Finalise the design of the technical requirements: speaker setup and fitting the computer into the cube.

9th April — Create a full-sized prototype and working model to start implementing the tech setup (location of computer and Arduino, speakers, etc.). Continue recording vocals if necessary, and continue working on the Max patch to troubleshoot and get a sense of the finished project in physical and aural space.

16th April — Continue tweaking parameters in Max, Arduino and Twitter API settings to achieve creative objectives. Troubleshoot the technical setup and test interactive settings as much as possible.

23rd April — Delivery week, set up in space and troubleshoot.

Progress to date:

I have done a lot of research regarding the specific technical requirements for my piece, but will continue to do this throughout to make sure I have both a thorough technical grounding as well as an interesting theoretical framework.

References:

Chorus by Matthew Herbert:

https://chorus.wellcomecollection.org/

https://wellcomecollection.org/chorus

Harvest by Julian Oliver:

https://julianoliver.com/output/

Natalie Jeremijenko:

https://www.ted.com/talks/natalie_jeremijenko_the_art_of_the_eco_mindshift#t-145670

Melissa Coleman:

http://melissacoleman.nl/

Shiffman on Twitter APIs:

https://www.youtube.com/watch?v=7-nX3YOC4OA&t=493s

Eeonyx fabrics:

http://eeonyx.com/#explore-products

Contraband International:

https://www.youtube.com/watch?v=OYf9dY8LC6w

Adafruit woven conductive fabric:

https://www.adafruit.com/product/1168